Explainable Artificial Intelligence for Hate Speech Detection

Researchers are finding innovative ways to use artificial intelligence (AI) to address various challenges. One example is using AI for hate speech detection on social media. This could help moderate the large volume of interactions that take place online, ideally reducing the negative effects of social media on users' mental health. Using explainable artificial intelligence, MDPI authors test whether this is plausible.

What is explainable artificial intelligence?

Most AI tools are black box systems, meaning they provide outputs without explaining how they produce them. As a result, users, and sometimes even the people who created the tools, cannot understand how the outputs come about.

In contrast, explainable artificial intelligence (XAI) methods explain why they produce certain outputs and how they arrive at them. Advocates for XAI tend to cite two main benefits:

- Transparency: XAI helps users validate whether outputs are produced fairly and accurately. This can help build trust in AI tools.

- Collaboration: XAI can be supported by human reasoning to further tweak algorithms and increase the potential uses for the tools.

In fields like medicine or law, where outputs can have serious consequences, these two benefits are vital.

Social media and mental health

Social media enables us to connect with people anywhere in the world at any time. People share their social lives, post their creative work, and come together for social causes.

However, the negative mental health effects of social media are being increasingly recognised. Effects include the following:

- Addiction.

- Anxiety.

- Depression.

- Decrease in attention span.

- Sleep disturbance.

Recommendations on how to address these issues tend to revolve around making lifestyle changes and taking breaks from social media.

Hate speech on social media

Hate speech is rife on social media. Detecting it is difficult, however, because many online interactions are grammatically incorrect, use slang or context-specific phrases, and are generated in enormous volumes at any moment.

The United Nations defines hate speech as the following:

Any kind of communication in speech, writing or behaviour, that attacks or uses pejorative or discriminatory language with reference to a person or a group on the basis of who they are, in other words, based on their religion, ethnicity, nationality, race, colour, descent, gender or other identity factor.

Hate speech can also be conveyed through images, memes, and symbols, which further complicates detecting it. Preventing it is a complicated issue, as it is influenced by complex, constantly shifting emotional, political, and social factors.

Hence, MDPI authors have attempted to use explainable artificial intelligence to detect hate speech on social media platforms.

Detecting hate speech using explainable artificial intelligence

An Editor’s Choice article in Algorithms explores the literature on using XAI for hate speech detection on social media. The authors found that hate speech detection is being explored using different AI approaches, but not using XAI specifically. They outline the goals of their study:

The main goal and the intended contribution of this paper are interpretating and explaining decisions made by complex artificial intelligence (AI) models to understand their decision-making process in hate speech detection.

The paper's primary aim, then, is not only to detect hate speech but also to understand how AI tools do so, in order to improve them further.

We’ll outline the methods and findings of the article, which you can explore in full here.

Training explainable artificial intelligence tools

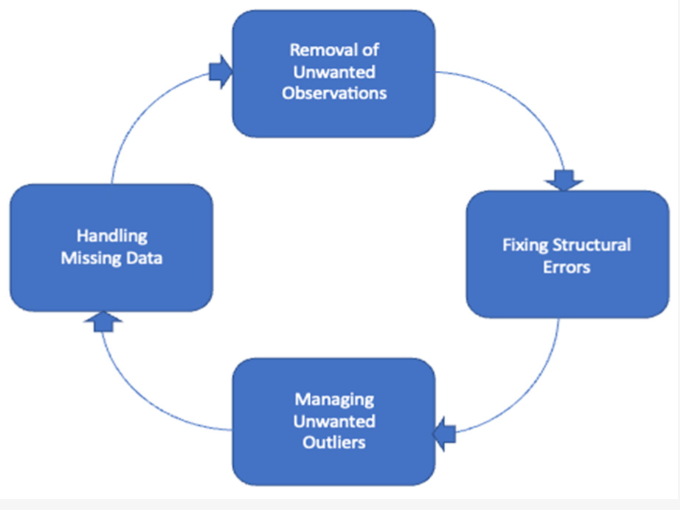

The authors used two datasets. The first is the Google Jigsaw dataset, which includes user discussions from English Wikipedia, and the second is the HateXplain dataset, which includes over 20,000 examples of hateful, offensive, and normal text from Twitter and Gab.

A key step is cleaning the data. This involves removing any incorrect or inconsistent information, improving data quality, and correcting any structural errors. Then, they performed a series of other steps that enable the tools to process and sort the information in the datasets, including converting the data into numbers.
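To make the idea of cleaning text and converting it into numbers more concrete, here is a minimal sketch in Python. It is not the authors' pipeline: the cleaning rules, the helper names (`clean_text`, `build_vocabulary`, `vectorize`), and the two example posts are all assumptions chosen for illustration, and the word-count representation is only one of many ways to turn text into numbers.

```python
import re
from collections import Counter


def clean_text(text: str) -> str:
    """Lowercase the text, drop URLs, mentions and punctuation, collapse spaces."""
    text = text.lower()
    text = re.sub(r"https?://\S+", " ", text)   # remove URLs
    text = re.sub(r"@\w+", " ", text)           # remove user mentions
    text = re.sub(r"[^a-z0-9\s']", " ", text)   # remove punctuation and symbols
    return re.sub(r"\s+", " ", text).strip()    # collapse repeated whitespace


def build_vocabulary(texts):
    """Map every distinct word to a column index (alphabetical order)."""
    tokens = sorted({tok for t in texts for tok in t.split()})
    return {tok: i for i, tok in enumerate(tokens)}


def vectorize(text, vocab):
    """Turn one cleaned text into a list of word counts (bag of words)."""
    counts = Counter(text.split())
    vector = [0] * len(vocab)
    for tok, n in counts.items():
        if tok in vocab:
            vector[vocab[tok]] = n
    return vector


# Hypothetical example posts, not taken from either dataset.
raw = ["Check THIS out!! https://example.com @user", "this is   fine."]
cleaned = [clean_text(t) for t in raw]
vocab = build_vocabulary(cleaned)
vectors = [vectorize(t, vocab) for t in cleaned]
```

Each post ends up as a row of numbers with one column per word in the vocabulary, which is the kind of input a classifier can work with.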

This was all in preparation for the main goal of the article: understanding how different models detect hate speech.

Can XAI tools detect hate speech?

Overall, the authors measured the accuracy, precision, and F1 score of the different models, finding high accuracies:

- Long Short-Term Memory (LSTM) had the highest accuracy at 97.6%.

- Multinomial Naïve Bayes followed behind with an accuracy of 96%.

Alongside this, Bidirectional Encoder Representations from Transformers (BERT) models, which are machine learning frameworks designed to analyse the context and meaning of language, performed well when paired with other models:

- BERT + Artificial Neural Networks (ANN) achieved an accuracy of 93.55%.

- BERT + Multilayer Perceptron (MLP) achieved an accuracy of 93.67%.
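For readers unfamiliar with the metrics reported above, the sketch below shows how accuracy, precision, and F1 score are computed for a binary classifier. The labels and predictions are made up for illustration (1 meaning hateful, 0 meaning not hateful), and the function name `classification_metrics` is an assumption, not something from the article.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical labels: 1 = hateful, 0 = not hateful.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

Accuracy alone can be misleading when hateful posts are rare, which is why precision and F1 are usually reported alongside it.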

These results demonstrate that AI tools have a lot of potential to be used for hate speech detection on social media, with accuracies over 90%. But what about their explainability?

How explainable are the AI tools?

The AI tools output a number indicating the probability that a text is hateful. The XAI tools reveal how much each word in the text contributes to that probability. A prediction counts as a match if any of the words the model highlights overlap with the rationales annotated by humans.

Where the BERT tools excelled was in terms of their explainability. For these, the authors found good overall performance on ERASER (Evaluating Rationales And Simple English Reasoning), a benchmark for measuring the explainability of AI models.
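One simple way to picture per-word weights is to remove each word in turn and see how much the predicted probability changes. The sketch below does this with a toy scorer; it illustrates the general idea only and is not necessarily the attribution method the authors used. The word scores in `TOY_WEIGHTS`, the example sentence, and the human rationale are all hypothetical.

```python
import math

# Hypothetical word scores standing in for a trained classifier.
TOY_WEIGHTS = {"idiots": 2.0, "hate": 1.5, "love": -1.5}
BIAS = -1.0


def predict_proba(tokens):
    """Toy probability that the text is hateful (logistic over word scores)."""
    score = BIAS + sum(TOY_WEIGHTS.get(t, 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-score))


def occlusion_weights(tokens):
    """Weight of each word = drop in probability when that word is removed."""
    base = predict_proba(tokens)
    return [(tok, base - predict_proba(tokens[:i] + tokens[i + 1:]))
            for i, tok in enumerate(tokens)]


def top_k(weights, k=2):
    """Return the k words with the largest weights."""
    ranked = sorted(weights, key=lambda pair: pair[1], reverse=True)
    return {tok for tok, _ in ranked[:k]}


def matches_rationale(predicted, human):
    """Count a match if any highlighted word overlaps the human rationale."""
    return len(predicted & human) > 0


tokens = "i hate those idiots".split()
weights = occlusion_weights(tokens)
predicted = top_k(weights, k=2)
human_rationale = {"idiots"}  # hypothetical annotation
is_match = matches_rationale(predicted, human_rationale)
```

Here the two highest-weighted words are the ones the toy scorer relies on, and the prediction counts as a match because one of them also appears in the human annotation.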
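The ERASER benchmark includes two scores for how faithful a rationale is to the model: comprehensiveness (how far the predicted probability falls when the rationale words are removed) and sufficiency (how far it falls when only the rationale words are kept). The sketch below illustrates both on a toy scorer. The scorer, its word scores, and the example rationale are assumptions for illustration and do not reproduce the models or results in the article.

```python
import math

# Hypothetical word scores standing in for a trained classifier.
TOY_WEIGHTS = {"idiots": 2.0, "hate": 1.5, "love": -1.5}
BIAS = -1.0


def predict_proba(tokens):
    """Toy probability that the text is hateful (logistic over word scores)."""
    score = BIAS + sum(TOY_WEIGHTS.get(t, 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-score))


def comprehensiveness(tokens, rationale):
    """Probability drop after deleting the rationale words (higher is better)."""
    remaining = [t for t in tokens if t not in rationale]
    return predict_proba(tokens) - predict_proba(remaining)


def sufficiency(tokens, rationale):
    """Probability drop when keeping only the rationale words (lower is better)."""
    kept = [t for t in tokens if t in rationale]
    return predict_proba(tokens) - predict_proba(kept)


tokens = "i hate those idiots".split()
rationale = {"hate", "idiots"}  # hypothetical model rationale
comp = comprehensiveness(tokens, rationale)
suff = sufficiency(tokens, rationale)
```

In this toy case the rationale captures everything the scorer relies on, so removing it causes a large drop in probability while keeping only the rationale causes none.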

Using XAI tools and understanding how they achieve their outputs allows users to ensure the tools work as intended and are not achieving the desired outputs in incorrect ways. The latter could lead to the tools working on initial datasets and then failing to function correctly when implemented on live data.

Furthermore, since hate speech detection can have serious consequences, understanding the tools and ensuring they work correctly will benefit users of social media platforms and ideally reduce the negative mental health effects of these platforms.

Tackling hate speech online using XAI

Social media has a range of benefits and negative consequences. Hate speech can spread online and impact individuals or groups of people, with effects extending outside of online spaces.

AI tools are being implemented in hate speech detection, but often without explainable artificial intelligence. By using XAI, these tools can be implemented more efficiently and more transparently, helping to build trust in their use.

Read or submit innovative research

MDPI publishes cutting-edge research relating to artificial intelligence and other technologies that could help us tackle the world’s most pressing issues.

Moreover, MDPI makes all its research immediately available worldwide, giving readers free and unlimited access to the full text of all published articles. It has over 400 journals dedicated to providing the latest findings, many of which also publish interdisciplinary research.

So, if you’re interested in submitting to Algorithms, why not look at its list of Special Issues?